As LLMs get embedded deeper into developer workflows- writing code, generating configs, summarizing incidents, teams are moving fast to adopt AI without fully understanding what it breaks. The tools feel great at first, but behind the productivity boost lies a growing set of blind spots.

The security surface is changing. Old attack patterns are resurfacing in new form. And most teams are shipping AI features without the right architecture, oversight, or even a basic threat model.

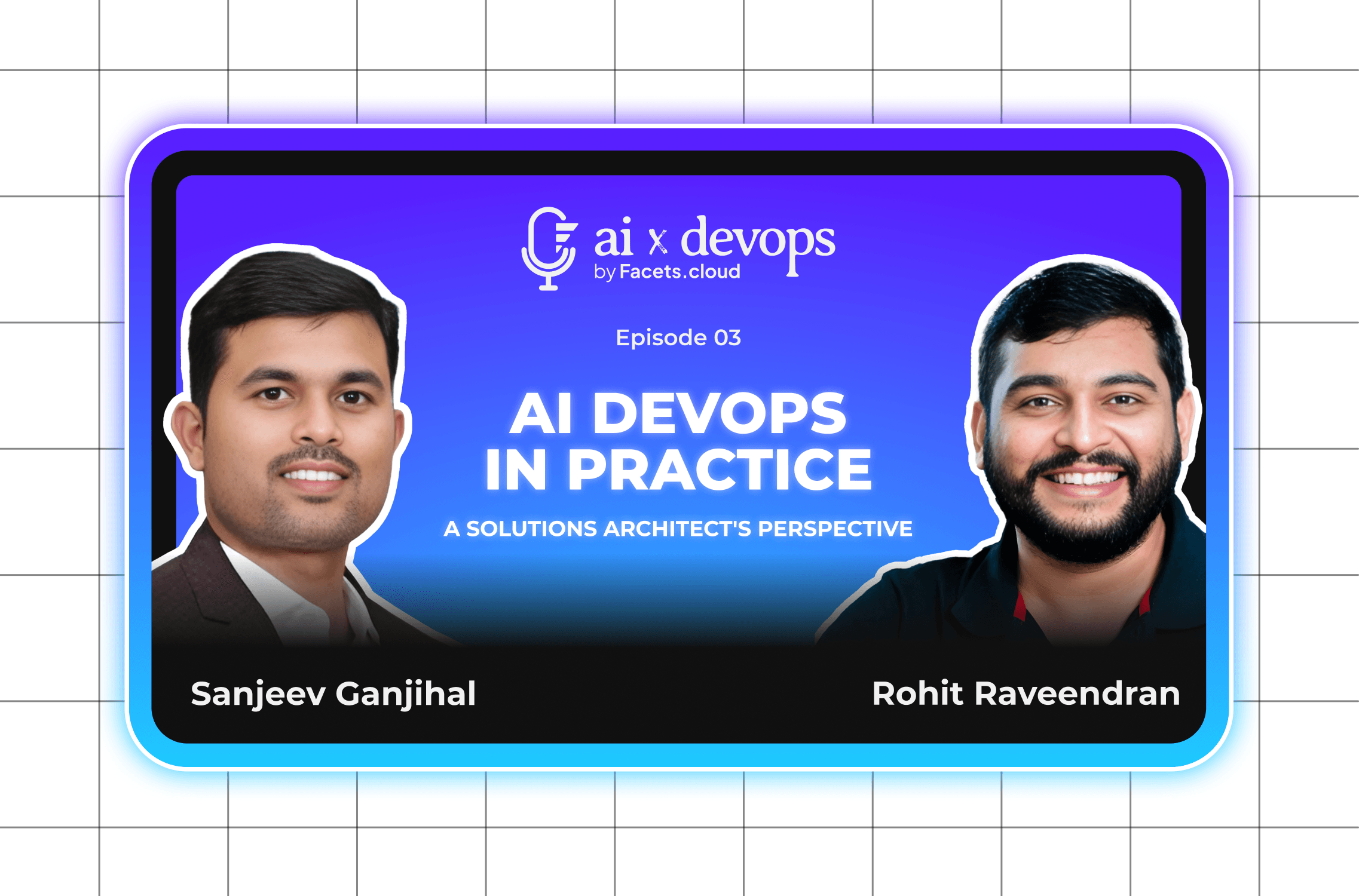

This episode of the AI x DevOps podcast tackles these issues head-on. Rohit, Co-founder of Facets, spoke with Nathan Hamiel, Director of Research at Kudelski Security and the AI/ML track lead at Black Hat. With decades in offensive security and recent focus on AI threats in production systems, Nathan shared a grounded, technical view of how AI is actually performing and where it’s quietly introducing risk.

What you’ll learn:

Where AI-generated code adds overhead instead of saving time

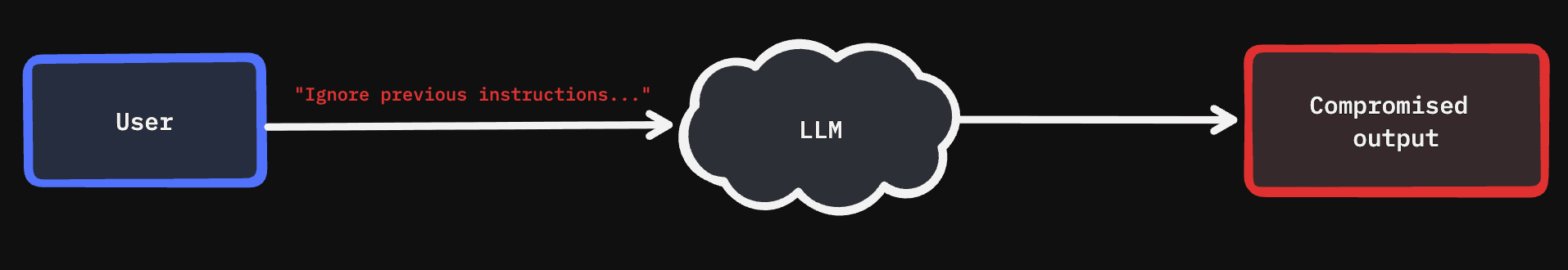

How prompt injection works and why it's difficult to fix at the model level

How to incorporate LLMs into security tooling without breaking trust boundaries

Why architectural decisions matter more than model accuracy in production setups

Key Takeaways:

- AI shifts development effort downstream; saved time in coding often leads to more time in integration and debugging.

- Prompt injection remains a core vulnerability.

- Most vulnerabilities in AI tools stem from poor containment, missing guardrails, or overexposed data.

- Using LLMs to augment deterministic tools like fuzzers or static analysis works well, when scoped carefully.

- RBAC, execution isolation, and sandboxing are critical for AI agents that trigger actions or access internal systems.

- Risk must be evaluated per use case.

Building with AI? Start with a Secure Mindset

We’re in a phase of AI euphoria. LLMs are being woven into products at record speed—writing code, handling data pipelines, powering agents. The results are flashy. But behind the scenes, we’re shipping old problems at new velocity.

“We’re seeing a monumental number of old school vulnerabilities coming back into production because of AI development.”

— Nathan Hamiel, Director of Research, Kudelski Security

This post isn’t about future AI safety or speculation about AGI. It’s about what’s happening now- how security is quietly regressing in the rush to integrate AI.

1. Same Flaws, Shipped Faster

The most common issues we’re encountering aren’t new. They’re not even unique to AI. But the speed and volume of AI-assisted development are resurfacing conventional vulnerabilities in bulk.

Security is about discipline. LLMs don’t replace that. If anything, they require more of it because the illusion of productivity can mask what’s going wrong underneath.

2. 🎯 AI-Native Risks Still Matter

Prompt Injection

The flagship example: users embedding instructions in input that override system behaviour. It’s hard to detect, harder to defend, and totally alien to traditional application threat models.

Hallucinated Dependencies

LLMs can invent package names. Malicious actors can register them. It’s not widespread, but it’s a new class of exploit that didn’t exist before.

These risks don’t replace traditional ones but compound them.

3. Architect for Failure, Not the Demo

Many of the worst vulnerabilities Nathan's team found weren’t clever exploits. They were architectural oversights:

Secrets stored alongside agents

Unrestricted internet access

No validation of what code gets executed

The antidote is architectural discipline. At Facets, we’ve leaned into a deterministic pipeline model where the AI can assist in generating outputs—but the actual execution path remains reviewable, predictable, and safe.

“We don’t let AI make production decisions. It generates outputs that pass through a deterministic pipeline with validations and context-aware safeguards.”

— Rohit Raveendran

This approach doesn’t remove all risk; but it contains it. And that’s the point.

4. Hallucinated Data: A Silent Threat

One overlooked risk isn’t in the code. It’s in the data. AI-generated output is already leaking into public datasets. Mistakes, inaccuracies, half-truths—all of it gets scraped and recycled.

“The hallucinated data of today may end up being the facts of tomorrow.”

— Nathan Hamiel

Once it’s embedded in training data, it becomes harder to correct. Garbage in, model out. Read Nathan's blog

5. No, We’re Not Seeing Exponential Progress

There’s a pervasive belief that AI progress is exponential. The tooling feels impressive—until you scratch beneath the surface.

Each model iteration feels magical at first. But deeper use reveals familiar problems: fragility, unreliability, bloated output, shallow reasoning.

The takeaway: be practical. Ground your decisions in what the model can do, not what the market says it might someday do.

Beyond the Hype Cycle: A Pragmatic View of AI's Future

This blog only scratches the surface of the conversation. In the full episode, Nathan Hamiel dives deeper into:

Why model hallucinations are a long-term reliability risk

The growing security blind spots in MCPs and AI agent stacks

How teams can use existing DevSecOps workflows to safely scale LLM adoption

Why “safe enough” is not a valid approach when AI output affects production systems

▶️ Listen now on [Spotify], [Apple Podcasts], [YouTube] or wherever you get your podcasts.

And if you found the episode useful, share it with your team, especially your security and platform engineers.